What is the Cluster API Provider CloudStack

The Cluster API brings declarative, Kubernetes-style APIs to cluster creation, configuration and management.

The API itself is shared across multiple cloud providers, allowing for true Apache CloudStack hybrid deployments of Kubernetes. It is built atop the lessons learned from previous cluster managers such as kops and kubicorn.

Launching a Kubernetes cluster on Apache CloudStack

Check out the Getting Started Guide to create your first Kubernetes cluster on Apache CloudStack using Cluster API.

Features

- Native Kubernetes manifests and API

- Choice of Linux distribution (as long as a current cloud-init is available). Tested on Ubuntu, CentOS, Rocky Linux and RHEL

- Support for single and multi-node control plane clusters

- Deploy clusters on Isolated and Shared Networks

- cloud-init based node bootstrapping

Compatibility with Cluster API and Kubernetes Versions

This provider’s versions are able to install and manage the following versions of Kubernetes:

| Kubernetes Version | v1.22 | v1.23 | v1.24 | v1.25 | v1.26 | v1.27 | v1.28 | v1.29 | v1.30 | v1.31 | v1.32 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| CloudStack Provider (v0.4) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | | | | | |
| CloudStack Provider (v0.6) | | | | | | | ✓ | ✓ | ✓ | ✓ | ✓ |

Note: The above matrix is based on what has been tested. The provider may work with older or newer versions of Kubernetes, but these have not been tested.

Compatibility with Apache CloudStack Versions

This provider’s versions are able to work on the following versions of Apache CloudStack:

| CloudStack Version | 4.14 | 4.15 | 4.16 | 4.17 | 4.18 | 4.19 | 4.20 |
|---|---|---|---|---|---|---|---|
| CloudStack Provider (v0.4) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |
| CloudStack Provider (v0.6) | | | | | | ✓ | ✓ |

Note: The above matrix is based on what has been tested. The provider may work with older or newer versions of Apache CloudStack, but these have not been tested.

Operating system images

Note: Cluster API Provider CloudStack relies on a few prerequisites which have to be already installed in the used operating system images, e.g. a container runtime, kubelet, kubeadm, etc. Reference images can be found in kubernetes-sigs/image-builder.
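One rough way to sanity-check a candidate image is to boot a VM from it and confirm that the expected binaries are on the PATH. The sketch below is illustrative only: the helper name `missing_tools` is hypothetical, and the tool list in the usage comment (including containerd as the container runtime) is an assumption based on the prerequisites named above, not an official checklist.

```shell
#!/bin/sh
# Print the name of every command in the argument list that is not on PATH.
# Hypothetical helper; not part of CAPC or image-builder.
missing_tools() {
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            printf '%s\n' "$tool"
        fi
    done
}

# Usage, inside a VM booted from the image. containerd is an assumed
# container runtime; substitute whichever runtime your image ships.
# missing_tools kubelet kubeadm containerd cloud-init
```

An empty result means every listed command was found. It says nothing about versions or configuration, so it complements the reference images rather than replacing them.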

Prebuilt images can be found below:

| Hypervisor | Kubernetes Version | Rocky Linux 9 | Ubuntu 22.04 | Ubuntu 24.04 |
|---|---|---|---|---|
| KVM | v1.28 | qcow2, md5 | qcow2, md5 | qcow2, md5 |
| KVM | v1.29 | qcow2, md5 | qcow2, md5 | qcow2, md5 |
| KVM | v1.30 | qcow2, md5 | qcow2, md5 | qcow2, md5 |
| KVM | v1.31 | qcow2, md5 | qcow2, md5 | qcow2, md5 |
| KVM | v1.32 | qcow2, md5 | qcow2, md5 | qcow2, md5 |
| VMware | v1.28 | ova, md5 | ova, md5 | ova, md5 |
| VMware | v1.29 | ova, md5 | ova, md5 | ova, md5 |
| VMware | v1.30 | ova, md5 | ova, md5 | ova, md5 |
| VMware | v1.31 | ova, md5 | ova, md5 | ova, md5 |
| VMware | v1.32 | ova, md5 | ova, md5 | ova, md5 |
| XenServer | v1.28 | vhd, md5 | vhd, md5 | vhd, md5 |
| XenServer | v1.29 | vhd, md5 | vhd, md5 | vhd, md5 |
| XenServer | v1.30 | vhd, md5 | vhd, md5 | vhd, md5 |
| XenServer | v1.31 | vhd, md5 | vhd, md5 | vhd, md5 |
| XenServer | v1.32 | vhd, md5 | vhd, md5 | vhd, md5 |

Past images

| Hypervisor | Kubernetes Version | Rocky Linux 8 | Ubuntu 20.04 |
|---|---|---|---|
| KVM | v1.22 | qcow2, md5 | qcow2, md5 |
| KVM | v1.23 | qcow2, md5 | qcow2, md5 |
| KVM | v1.24 | qcow2, md5 | qcow2, md5 |
| KVM | v1.25 | qcow2, md5 | qcow2, md5 |
| KVM | v1.26 | qcow2, md5 | qcow2, md5 |
| KVM | v1.27 | qcow2, md5 | qcow2, md5 |
| VMware | v1.22 | ova, md5 | ova, md5 |
| VMware | v1.23 | ova, md5 | ova, md5 |
| VMware | v1.24 | ova, md5 | ova, md5 |
| VMware | v1.25 | ova, md5 | ova, md5 |
| VMware | v1.26 | ova, md5 | ova, md5 |
| VMware | v1.27 | ova, md5 | ova, md5 |
| XenServer | v1.22 | vhd, md5 | vhd, md5 |
| XenServer | v1.23 | vhd, md5 | vhd, md5 |
| XenServer | v1.24 | vhd, md5 | vhd, md5 |
| XenServer | v1.25 | vhd, md5 | vhd, md5 |
| XenServer | v1.26 | vhd, md5 | vhd, md5 |
| XenServer | v1.27 | vhd, md5 | vhd, md5 |

Getting involved and contributing

Are you interested in contributing to cluster-api-provider-cloudstack? We, the maintainers and community, would love your suggestions, contributions, and help! You can also contact the maintainers at any time to learn more about how to get involved.

Code of conduct

Participation in the Kubernetes community is governed by the Kubernetes Code of Conduct.

GitHub issues

Bugs

If you think you have found a bug, please follow the instructions below.

- Please spend a small amount of time giving due diligence to the issue tracker. Your issue might be a duplicate.

- Get the logs from the cluster controllers and paste them into your issue.

- Open a new issue.

- Remember that users might be searching for your issue in the future, so please give it a meaningful title to help others.

- Feel free to reach out to the Cluster API community on the Kubernetes Slack.

Tracking new features

We also use the issue tracker to track features. If you have an idea for a feature, or think you can help Cluster API Provider CloudStack become even more awesome, follow the steps below.

- Open a new issue.

- Remember that users might be searching for your issue in the future, so please give it a meaningful title to help others.

- Clearly define the use case, using concrete examples.

- Some of our larger features will require some design. If you would like to include a technical design for your feature, please include it in the issue.

- After the new feature is well understood, and the design agreed upon, we can start coding the feature. We would love for you to code it. So please open up a WIP (work in progress) pull request, and happy coding.

Our Contributors

Thank you to all contributors and a special thanks to our current maintainers & reviewers:

| Maintainers | Reviewers |

|---|---|

| @rohityadavcloud | @rohityadavcloud |

| @weizhouapache | @weizhouapache |

| @vishesh92 | @vishesh92 |

| @davidjumani | @davidjumani |

| @jweite-amazon | @jweite-amazon |


Getting Started

Prerequisites

- Follow the instructions here to install the following tools:
    - kubectl
    - clusterctl (requires v1.1.5 or later)

  Optional, if you do not have an existing Kubernetes cluster: kind, which is used below to create a local management cluster.

- Register the CAPI-compatible templates in your Apache CloudStack installation.
    - Prebuilt images can be found here
    - To build a compatible image, see CloudStack CAPI Images

- Create a management cluster. This can be either:
    - An existing Kubernetes cluster: for production use cases, a “real” Kubernetes cluster should be used, with appropriate backup and DR policies and procedures in place. The Kubernetes cluster must be at least v1.19.1.
    - A local cluster created with kind, for non-production use:

            kind create cluster

- Set up Apache CloudStack credentials as a secret in the management cluster
    - Create a file named cloud-config.yaml in the repo’s root directory, substituting in your own environment’s values:

            apiVersion: v1
            kind: Secret
            metadata:
              name: cloudstack-credentials
              namespace: default
            type: Opaque
            stringData:
              api-key: <cloudstackApiKey>
              secret-key: <cloudstackSecretKey>
              api-url: <cloudstackApiUrl>
              verify-ssl: "false"

    - Apply this secret to the management cluster. With the management cluster’s KUBECONFIG in effect:

            kubectl apply -f cloud-config.yaml

    - Delete cloud-config.yaml when done, for security
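As an alternative to writing cloud-config.yaml to disk and deleting it afterwards, the same Secret can be rendered on the fly and piped to kubectl. This is a minimal sketch rather than a documented CAPC workflow: the helper name `render_cloudstack_secret` and the `CS_*` variable names in the usage comment are hypothetical, and values containing YAML special characters would need quoting.

```shell
#!/bin/sh
# Print the credentials Secret on stdout, built from three arguments:
# API key, secret key and API URL. Hypothetical helper name.
render_cloudstack_secret() {
    api_key=$1
    secret_key=$2
    api_url=$3
    cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: cloudstack-credentials
  namespace: default
type: Opaque
stringData:
  api-key: ${api_key}
  secret-key: ${secret_key}
  api-url: ${api_url}
  verify-ssl: "false"
EOF
}

# Usage (needs kubectl and the management cluster's KUBECONFIG in effect;
# CS_API_KEY, CS_SECRET_KEY and CS_API_URL are assumed variable names):
# render_cloudstack_secret "$CS_API_KEY" "$CS_SECRET_KEY" "$CS_API_URL" | kubectl apply -f -
```

Because the manifest never touches the filesystem, there is no file to remember to delete, although the credentials may still end up in your shell history or environment.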

Initialize the management cluster

Run the following command to turn your cluster into a management cluster and load the Apache CloudStack components into it.

CAPC_CLOUDSTACKMACHINE_CKS_SYNC=true clusterctl init --infrastructure cloudstack

Integration of CAPC with CKS is supported for Apache CloudStack version 4.19 and above. If you wish to disable syncing of CAPC resources with CloudStack, set the environment variable CAPC_CLOUDSTACKMACHINE_CKS_SYNC=false before initializing the cloudstack provider, or set enable-cloudstack-cks-sync to false in the deployment spec for capc-controller-manager.

Creating a CAPC Cluster:

- Set up the environment variables. The generated cluster spec is populated from the values set here. See the example values below (and replace them with your own!)

  The entire list of configuration variables, as well as how to fetch them, can be found here

        # The Apache CloudStack zone in which the cluster is to be deployed
        export CLOUDSTACK_ZONE_NAME=zone1

        # If the referenced network doesn't exist, a new isolated network
        # will be created.
        export CLOUDSTACK_NETWORK_NAME=GuestNet1

        # The IP you put here must be available as an unused public IP on the network
        # referenced above. If it's not available, the control plane will fail to create.
        # You can see the list of available IP's when you try allocating a public
        # IP in the network at
        # Network -> Guest Networks -> <Network Name> -> IP Addresses
        export CLUSTER_ENDPOINT_IP=192.168.1.161

        # This is the standard port that the Control Plane process runs on
        export CLUSTER_ENDPOINT_PORT=6443

        # Machine offerings must be pre-created. Control plane offering
        # must have >2GB RAM available
        export CLOUDSTACK_CONTROL_PLANE_MACHINE_OFFERING="Large Instance"
        export CLOUDSTACK_WORKER_MACHINE_OFFERING="Small Instance"

        # Referring to a prerequisite capi-compatible image you've loaded into Apache CloudStack
        export CLOUDSTACK_TEMPLATE_NAME=kube-v1.23.3/ubuntu-2004

        # The SSH KeyPair to log into the VM (Optional: you must use clusterctl --flavor *managed-ssh*)
        export CLOUDSTACK_SSH_KEY_NAME=CAPCKeyPair6

        # Sync resources created by CAPC in Apache Cloudstack CKS. Default is false.
        # Requires setting CAPC_CLOUDSTACKMACHINE_CKS_SYNC=true before initialising the cloudstack provider.
        # Or set enable-cloudstack-cks-sync to true in the deployment for capc-controller.
        export CLOUDSTACK_SYNC_WITH_ACS=true

- Generate the CAPC cluster spec yaml file

        clusterctl generate cluster capc-cluster \
            --kubernetes-version v1.23.3 \
            --control-plane-machine-count=1 \
            --worker-machine-count=1 \
            > capc-cluster-spec.yaml

- Apply the CAPC cluster spec to your kind management cluster

        kubectl apply -f capc-cluster-spec.yaml

- Check the progress of capc-cluster, and wait for all the components (with the exception of MachineDeployment/capc-cluster-md-0) to be ready. (MachineDeployment/capc-cluster-md-0 will not show ready until the CNI is installed.)

        clusterctl describe cluster capc-cluster

- Get the generated kubeconfig for your newly created Apache CloudStack cluster capc-cluster

        clusterctl get kubeconfig capc-cluster > capc-cluster.kubeconfig

- Install the calico or weave net CNI plugin on the workload cluster so that pods can see each other

        KUBECONFIG=capc-cluster.kubeconfig kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/master/manifests/calico.yaml

  or

        KUBECONFIG=capc-cluster.kubeconfig kubectl apply -f https://raw.githubusercontent.com/weaveworks/weave/master/prog/weave-kube/weave-daemonset-k8s-1.11.yaml

- Verify the K8s cluster is fully up. (It may take a minute for all nodes to reach the Ready state.)
    - Run KUBECONFIG=capc-cluster.kubeconfig kubectl get nodes, and observe the following output

            NAME                               STATUS   ROLES                  AGE     VERSION
            capc-cluster-control-plane-xsnxt   Ready    control-plane,master   2m56s   v1.20.10
            capc-cluster-md-0-9fr9d            Ready    <none>                 112s    v1.20.10
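Since the cluster spec is generated from the exported variables, a forgotten export is easy to miss until the spec is applied. The sketch below lists the variables that are still unset or empty. The helper name `unset_capc_vars` is hypothetical, and the list of names simply mirrors the non-optional exports in the example above; it is an assumption, not the authoritative set of required variables.

```shell
#!/bin/sh
# Print the name of each variable from the example above that is unset or
# empty in the current shell. Hypothetical helper; the variable list is an
# assumption taken from the example exports (optional ones are left out).
unset_capc_vars() {
    for name in CLOUDSTACK_ZONE_NAME CLOUDSTACK_NETWORK_NAME \
                CLUSTER_ENDPOINT_IP CLUSTER_ENDPOINT_PORT \
                CLOUDSTACK_CONTROL_PLANE_MACHINE_OFFERING \
                CLOUDSTACK_WORKER_MACHINE_OFFERING \
                CLOUDSTACK_TEMPLATE_NAME; do
        # Indirect lookup that works in plain POSIX sh.
        eval "value=\${$name:-}"
        [ -n "$value" ] || printf '%s\n' "$name"
    done
}

# Usage: run before "clusterctl generate cluster"; no output means every
# listed variable has a value.
# unset_capc_vars
```

The check only confirms that values are present. Whether the zone, network, offerings and template actually exist in CloudStack is still verified by the controller at reconcile time.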

Validating the CAPC Cluster:

Run a simple Kubernetes app called ‘test-thing’

- Create the container

KUBECONFIG=capc-cluster.kubeconfig kubectl run test-thing --image=rockylinux/rockylinux:8 --restart=Never -- /bin/bash -c 'echo Hello, World!'

KUBECONFIG=capc-cluster.kubeconfig kubectl get pods

- Wait for the container to complete, and check the logs for ‘Hello, World!’

KUBECONFIG=capc-cluster.kubeconfig kubectl logs test-thing

kubectl/clusterctl Reference:

- Pods in capc-cluster -- cluster running in Apache CloudStack with calico cni

% KUBECONFIG=capc-cluster.kubeconfig kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default test-thing 0/1 Completed 0 2m43s

kube-system calico-kube-controllers-784dcb7597-dw42t 1/1 Running 0 4m31s

kube-system calico-node-mmp2x 1/1 Running 0 4m31s

kube-system calico-node-vz99f 1/1 Running 0 4m31s

kube-system coredns-74ff55c5b-n6zp7 1/1 Running 0 9m18s

kube-system coredns-74ff55c5b-r8gvj 1/1 Running 0 9m18s

kube-system etcd-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

kube-system kube-apiserver-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

kube-system kube-controller-manager-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

kube-system kube-proxy-6g9zb 1/1 Running 0 9m3s

kube-system kube-proxy-7gjbv 1/1 Running 0 9m18s

kube-system kube-scheduler-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

- Pods in capc-cluster -- cluster running in Apache CloudStack with weave net cni

% KUBECONFIG=capc-cluster.kubeconfig kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default test-thing 0/1 Completed 0 38s

kube-system coredns-5d78c9869d-9xq2s 1/1 Running 0 21h

kube-system coredns-5d78c9869d-gphs2 1/1 Running 0 21h

kube-system etcd-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system kube-apiserver-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system kube-controller-manager-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system kube-proxy-8lfnm 1/1 Running 0 21h

kube-system kube-proxy-brj78 1/1 Running 0 21h

kube-system kube-scheduler-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system weave-net-rqckr 2/2 Running 1 (3h8m ago) 3h8m

kube-system weave-net-rzms4 2/2 Running 1 (3h8m ago) 3h8m

- Pods in original kind cluster (also called bootstrap cluster, management cluster)

% kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

capc-system capc-controller-manager-55798f8594-lp2xs 1/1 Running 0 30m

capi-kubeadm-bootstrap-system capi-kubeadm-bootstrap-controller-manager-7857cd7bb8-rldnw 1/1 Running 0 30m

capi-kubeadm-control-plane-system capi-kubeadm-control-plane-controller-manager-6cc4b4d964-tz5zq 1/1 Running 0 30m

capi-system capi-controller-manager-7cfcfdf99b-79lr9 1/1 Running 0 30m

cert-manager cert-manager-848f547974-dl7hc 1/1 Running 0 31m

cert-manager cert-manager-cainjector-54f4cc6b5-gfgsw 1/1 Running 0 31m

cert-manager cert-manager-webhook-7c9588c76-5m2b2 1/1 Running 0 31m

kube-system coredns-558bd4d5db-22zql 1/1 Running 0 48m

kube-system coredns-558bd4d5db-7g7kh 1/1 Running 0 48m

kube-system etcd-capi-test-control-plane 1/1 Running 0 48m

kube-system kindnet-7p2dq 1/1 Running 0 48m

kube-system kube-apiserver-capi-test-control-plane 1/1 Running 0 48m

kube-system kube-controller-manager-capi-test-control-plane 1/1 Running 0 48m

kube-system kube-proxy-cwrhv 1/1 Running 0 48m

kube-system kube-scheduler-capi-test-control-plane 1/1 Running 0 48m

local-path-storage local-path-provisioner-547f784dff-f2g7r 1/1 Running 0 48m

Topics

- Move From Bootstrap

- Troubleshooting

- Custom Images

- SSH Access To Nodes

- Unstacked etcd

- CloudStack Permissions

TODO :

- Data Disks

- Diff between CKS and CAPC

- E2E Tests

Move From Bootstrap

This documentation describes how to move Cluster API related objects from the bootstrap cluster to the target cluster.

Check clusterctl move for further information.

Pre-condition

Bootstrap cluster

# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

capc-system capc-controller-manager-5d8b989c5c-zqvcn 1/1 Running 0 23m

capi-kubeadm-bootstrap-system capi-kubeadm-bootstrap-controller-manager-58db4b5555-crcc8 1/1 Running 0 23m

capi-kubeadm-control-plane-system capi-kubeadm-control-plane-controller-manager-86c4dcbc4c-8xvql 1/1 Running 0 23m

capi-system capi-controller-manager-56f77c8f7b-s5q8f 1/1 Running 0 23m

cert-manager cert-manager-848f547974-tzqtg 1/1 Running 0 23m

cert-manager cert-manager-cainjector-54f4cc6b5-hslzq 1/1 Running 0 23m

cert-manager cert-manager-webhook-7c9588c76-pz42g 1/1 Running 0 23m

kube-system coredns-558bd4d5db-2xxlz 1/1 Running 0 34m

kube-system coredns-558bd4d5db-wjdbw 1/1 Running 0 34m

kube-system etcd-kind-control-plane 1/1 Running 0 34m

kube-system kindnet-lkgjb 1/1 Running 0 34m

kube-system kube-apiserver-kind-control-plane 1/1 Running 0 34m

kube-system kube-controller-manager-kind-control-plane 1/1 Running 0 34m

kube-system kube-proxy-gv7pv 1/1 Running 0 34m

kube-system kube-scheduler-kind-control-plane 1/1 Running 0 34m

local-path-storage local-path-provisioner-547f784dff-79kq4 1/1 Running 0 34m

Target cluster

# kubectl get pods --kubeconfig target.kubeconfig --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-784dcb7597-dw42t 1/1 Running 0 41m

kube-system calico-node-mmp2x 1/1 Running 0 41m

kube-system calico-node-vz99f 1/1 Running 0 41m

kube-system coredns-558bd4d5db-5pvfm 1/1 Running 0 43m

kube-system coredns-558bd4d5db-gcv5j 1/1 Running 0 43m

kube-system etcd-target-control-plane 1/1 Running 0 43m

kube-system kindnet-4w84z 1/1 Running 0 43m

kube-system kube-apiserver-target-control-plane 1/1 Running 0 43m

kube-system kube-controller-manager-target-control-plane 1/1 Running 0 43m

kube-system kube-proxy-zstvt 1/1 Running 0 43m

kube-system kube-scheduler-target-control-plane 1/1 Running 0 43m

The bootstrap cluster is currently managing an existing workload cluster

# clusterctl describe cluster cloudstack-capi

NAME READY SEVERITY REASON SINCE MESSAGE

Cluster/cloudstack-capi True 9m31s

├─ClusterInfrastructure - CloudStackCluster/cloudstack-capi

├─ControlPlane - KubeadmControlPlane/cloudstack-capi-control-plane True 9m31s

│ └─Machine/cloudstack-capi-control-plane-xhgb9 True 9m51s

│ └─MachineInfrastructure - CloudStackMachine/cloudstack-capi-control-plane-59qrb

└─Workers

└─MachineDeployment/cloudstack-capi-md-0 True 7m20s

└─Machine/cloudstack-capi-md-0-75499bbf6-zqktd True 8m56s

└─MachineInfrastructure - CloudStackMachine/cloudstack-capi-md-0-cl5ht

Install CloudStack Cluster API provider into target cluster

You need to install the Apache CloudStack Cluster API providers into the target cluster first.

# clusterctl --kubeconfig target.kubeconfig init --infrastructure cloudstack

Fetching providers

Installing cert-manager Version="v1.5.3"

Waiting for cert-manager to be available...

Installing Provider="cluster-api" Version="v1.1.3" TargetNamespace="capi-system"

Installing Provider="bootstrap-kubeadm" Version="v1.1.3" TargetNamespace="capi-kubeadm-bootstrap-system"

Installing Provider="control-plane-kubeadm" Version="v1.1.3" TargetNamespace="capi-kubeadm-control-plane-system"

Installing Provider="infrastructure-cloudstack" Version="v1.0.0" TargetNamespace="capc-system"

Your management cluster has been initialized successfully!

You can now create your first workload cluster by running the following:

clusterctl generate cluster [name] --kubernetes-version [version] | kubectl apply -f -

Move objects from bootstrap cluster into target cluster.

Objects of the Cluster API CRDs, such as CloudStackCluster and CloudStackMachine, need to be moved.

# clusterctl move --to-kubeconfig target.kubeconfig -v 10

Using configuration File="/home/djumani/.cluster-api/clusterctl.yaml"

Performing move...

Discovering Cluster API objects

Cluster Count=1

KubeadmConfigTemplate Count=1

KubeadmControlPlane Count=1

MachineDeployment Count=1

MachineSet Count=1

CloudStackCluster Count=1

CloudStackMachine Count=2

CloudStackMachineTemplate Count=2

Machine Count=2

KubeadmConfig Count=2

ConfigMap Count=1

Secret Count=8

Total objects Count=23

Excluding secret from move (not linked with any Cluster) name="default-token-nd9nb"

Moving Cluster API objects Clusters=1

Moving Cluster API objects ClusterClasses=0

Pausing the source cluster

Set Cluster.Spec.Paused Paused=true Cluster="cloudstack-capi" Namespace="default"

Pausing the source cluster classes

Creating target namespaces, if missing

Creating objects in the target cluster

Creating Cluster="cloudstack-capi" Namespace="default"

Creating CloudStackMachineTemplate="cloudstack-capi-md-0" Namespace="default"

Creating KubeadmControlPlane="cloudstack-capi-control-plane" Namespace="default"

Creating CloudStackCluster="cloudstack-capi" Namespace="default"

Creating KubeadmConfigTemplate="cloudstack-capi-md-0" Namespace="default"

Creating MachineDeployment="cloudstack-capi-md-0" Namespace="default"

Creating CloudStackMachineTemplate="cloudstack-capi-control-plane" Namespace="default"

Creating Secret="cloudstack-capi-proxy" Namespace="default"

Creating Machine="cloudstack-capi-control-plane-xhgb9" Namespace="default"

Creating Secret="cloudstack-capi-ca" Namespace="default"

Creating Secret="cloudstack-capi-etcd" Namespace="default"

Creating MachineSet="cloudstack-capi-md-0-75499bbf6" Namespace="default"

Creating Secret="cloudstack-capi-kubeconfig" Namespace="default"

Creating Secret="cloudstack-capi-sa" Namespace="default"

Creating Machine="cloudstack-capi-md-0-75499bbf6-zqktd" Namespace="default"

Creating CloudStackMachine="cloudstack-capi-control-plane-59qrb" Namespace="default"

Creating KubeadmConfig="cloudstack-capi-control-plane-r6ns8" Namespace="default"

Creating KubeadmConfig="cloudstack-capi-md-0-z9ndx" Namespace="default"

Creating Secret="cloudstack-capi-control-plane-r6ns8" Namespace="default"

Creating CloudStackMachine="cloudstack-capi-md-0-cl5ht" Namespace="default"

Creating Secret="cloudstack-capi-md-0-z9ndx" Namespace="default"

Deleting objects from the source cluster

Deleting Secret="cloudstack-capi-md-0-z9ndx" Namespace="default"

Deleting KubeadmConfig="cloudstack-capi-md-0-z9ndx" Namespace="default"

Deleting Secret="cloudstack-capi-control-plane-r6ns8" Namespace="default"

Deleting CloudStackMachine="cloudstack-capi-md-0-cl5ht" Namespace="default"

Deleting Machine="cloudstack-capi-md-0-75499bbf6-zqktd" Namespace="default"

Deleting CloudStackMachine="cloudstack-capi-control-plane-59qrb" Namespace="default"

Deleting KubeadmConfig="cloudstack-capi-control-plane-r6ns8" Namespace="default"

Deleting Secret="cloudstack-capi-proxy" Namespace="default"

Deleting Machine="cloudstack-capi-control-plane-xhgb9" Namespace="default"

Deleting Secret="cloudstack-capi-ca" Namespace="default"

Deleting Secret="cloudstack-capi-etcd" Namespace="default"

Deleting MachineSet="cloudstack-capi-md-0-75499bbf6" Namespace="default"

Deleting Secret="cloudstack-capi-kubeconfig" Namespace="default"

Deleting Secret="cloudstack-capi-sa" Namespace="default"

Deleting CloudStackMachineTemplate="cloudstack-capi-md-0" Namespace="default"

Deleting KubeadmControlPlane="cloudstack-capi-control-plane" Namespace="default"

Deleting CloudStackCluster="cloudstack-capi" Namespace="default"

Deleting KubeadmConfigTemplate="cloudstack-capi-md-0" Namespace="default"

Deleting MachineDeployment="cloudstack-capi-md-0" Namespace="default"

Deleting CloudStackMachineTemplate="cloudstack-capi-control-plane" Namespace="default"

Deleting Cluster="cloudstack-capi" Namespace="default"

Resuming the target cluter classes

Resuming the target cluster

Set Cluster.Spec.Paused Paused=false Cluster="cloudstack-capi" Namespace="default"

Check cluster status

# clusterctl --kubeconfig target.kubeconfig describe cluster cloudstack-capi

NAME READY SEVERITY REASON SINCE MESSAGE

Cluster/cloudstack-capi True 107s

├─ClusterInfrastructure - CloudStackCluster/cloudstack-capi

├─ControlPlane - KubeadmControlPlane/cloudstack-capi-control-plane True 107s

│ └─Machine/cloudstack-capi-control-plane-xhgb9 True 115s

│ └─MachineInfrastructure - CloudStackMachine/cloudstack-capi-control-plane-59qrb

└─Workers

└─MachineDeployment/cloudstack-capi-md-0 True 115s

└─Machine/cloudstack-capi-md-0-75499bbf6-zqktd True 115s

└─MachineInfrastructure - CloudStackMachine/cloudstack-capi-md-0-cl5ht

# kubectl get cloudstackcluster --kubeconfig target.kubeconfig --all-namespaces

NAMESPACE NAME CLUSTER READY NETWORK

default cloudstack-capi cloudstack-capi true

# kubectl get cloudstackmachines --kubeconfig target.kubeconfig --all-namespaces

NAMESPACE NAME CLUSTER INSTANCESTATE READY PROVIDERID MACHINE

default cloudstack-capi-control-plane-59qrb cloudstack-capi true cloudstack:///81b9d65e-b365-4535-956f-9f845b730c54 cloudstack-capi-control-plane-xhgb9

default cloudstack-capi-md-0-cl5ht cloudstack-capi true cloudstack:///a05408b1-47fe-46b5-b128-a9d5492eaabf cloudstack-capi-md-0-75499bbf6-zqktd

Troubleshooting

This guide explains, in general terms, how to debug issues when cluster creation fails. It is based on kind, but other management clusters should behave similarly.

Get logs of Cluster API controller containers

kubectl -n capc-system logs -l control-plane=capc-controller-manager -c manager

Similarly, the logs of the other controllers in the namespaces capi-system, capi-kubeadm-bootstrap-system and capi-kubeadm-control-plane-system can be retrieved.

Authentication Error

This is caused when the API key and/or the signature is invalid. Please check them in the Accounts > User > API Key section of the UI or via the getUserKeys API.

E0325 04:30:51.030540 1 controller.go:317] controller/cloudstackcluster "msg"="Reconciler error" "error"="CloudStack API error 401 (CSExceptionErrorCode: 0): unable to verify user credentials and/or request signature" "name"="kvm-capi" "namespace"="default" "reconciler group"="infrastructure.cluster.x-k8s.io" "reconciler kind"="CloudStackCluster"

Cluster reconciliation failed with error: No match found for xxxx: {Count:0 yyyy:[]}

This is caused when resource ‘yyyy’ with the name ‘xxxx’ does not exist on the CloudStack instance.

E0325 04:12:44.047381 1 controller.go:317] controller/cloudstackcluster "msg"="Reconciler error" "error"="No match found for zone-1: \u0026{Count:0 Zones:[]}" "name"="kvm-capi" "namespace"="default" "reconciler group"="infrastructure.cluster.x-k8s.io" "reconciler kind"="CloudStackCluster"

In such a case, check the spelling of the resource name or create the resource in CloudStack. Then update the name in the workload cluster YAML (where the resource name is not immutable), or delete the CAPI resource and re-create it with the updated name.
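When the controller log is long, it can help to reduce it to the error messages alone. The filter below is a sketch that assumes log lines look like the two samples above, with `"msg"="Reconciler error"` followed by an `"error"="..."` field and then a `"name"=` field. The helper name `reconciler_errors` is hypothetical, and other log formats would need a different pattern.

```shell
#!/bin/sh
# Read controller logs on stdin and print only the "error" field of each
# "Reconciler error" line. Hypothetical helper; it assumes the key="value"
# log layout shown in the samples above.
reconciler_errors() {
    grep -F '"msg"="Reconciler error"' |
        sed -n 's/.*"error"="\(.*\)" "name"=.*/\1/p'
}

# Usage (needs kubectl and access to the management cluster):
# kubectl -n capc-system logs -l control-plane=capc-controller-manager -c manager | reconciler_errors
```

Piping the result through `sort | uniq -c` gives a quick count of each distinct error, which makes a repeating misconfiguration stand out.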

Custom Images

This document will help you get a CAPC Kubernetes cluster up and running with your custom image.

Prebuilt Images

An image defines the operating system and Kubernetes components that will populate the disk of each node in your cluster.

As of now, prebuilt images for KVM, VMware and XenServer are available here

Building a custom image

Cluster API uses the Kubernetes Image Builder tools. You should use the QEMU images from that project as a starting point for your custom image.

The Image Builder Book explains how to build the images defined in that repository, with instructions for CloudStack CAPI Images in particular.

The image is built using the KVM hypervisor as a qcow2 image.

Depending on the hypervisor requirements, it can then be converted into ova for VMware and vhd for XenServer via the convert-cloudstack-image.sh script.

Operating system requirements

For your custom image to work with Cluster API, it must meet the operating system requirements of the bootstrap provider. For example, the default kubeadm bootstrap provider has a set of preflight checks that a VM is expected to pass before it can join the cluster.

Kubernetes version requirements

The reference images are each built to support a specific version of Kubernetes. When using your custom images based on them, take care to match the image to the version: field of the KubeadmControlPlane and MachineDeployment in the YAML template for your workload cluster.

Creating a cluster from a custom image

To use a custom image, it needs to be referenced in the template: field of your CloudStackMachineTemplate.

Be sure to also update the version in the KubeadmControlPlane and MachineDeployment cluster spec.

    apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
    kind: CloudStackMachineTemplate
    metadata:
      name: capi-quickstart-control-plane
    spec:
      template:
        spec:
          offering: ControlPlaneOffering
          template: custom-image-name

Upgrading Kubernetes Versions

Upgrading to a new Kubernetes release with custom images requires the following preparation:

- Create a new custom image which supports the Kubernetes release version

- Register the custom image as a template in Apache CloudStack

- Copy the existing CloudStackMachineTemplate and change its template: field to reference the new custom image

- Create the new CloudStackMachineTemplate on the management cluster

- Modify the existing KubeadmControlPlane and MachineDeployment to reference the new CloudStackMachineTemplate and update the version: field to match

See Upgrading workload clusters for more details.
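The copy-and-edit step for the machine template can be scripted. The following is a rough sketch, not a CAPC tool: `retarget_machine_template` is a hypothetical helper that rewrites metadata.name and the image reference with sed. It does no real YAML parsing and assumes conventional two-space indentation, with name directly under metadata and the image reference at spec.template.spec.template.

```shell
#!/bin/sh
# Read a CloudStackMachineTemplate manifest on stdin and write a copy with
# a new metadata.name and a new image reference.
# Hypothetical helper: it matches lines purely by indentation (two spaces
# before "name:", six before "template:"), so review the output before use.
# Arguments must not contain "|", "&" or backslashes.
retarget_machine_template() {
    new_name=$1
    new_image=$2
    sed -e "s|^\(  name: \).*|\1${new_name}|" \
        -e "s|^\(      template: \).*|\1${new_image}|"
}

# Usage (file names and arguments are placeholders):
# retarget_machine_template <new-template-name> <new-image-name> \
#     < old-machine-template.yaml > new-machine-template.yaml
# kubectl apply -f new-machine-template.yaml
```

Giving the copy a new name matters because the KubeadmControlPlane and MachineDeployment are then pointed at the new template, as described in the list above.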

SSH Access To Nodes

This guide will detail the steps to access the CAPC nodes.

Please note that this will only work on Isolated Networks. To access nodes on Shared Networks, please configure the routes on the external network’s management plane.

Prerequisites

The user must either know the password of the node or have the SSH private key matching the public key in the authorized_keys file on the node.

To see how to pass a key pair to the node, check out the keypair configuration.

Configure Network Access

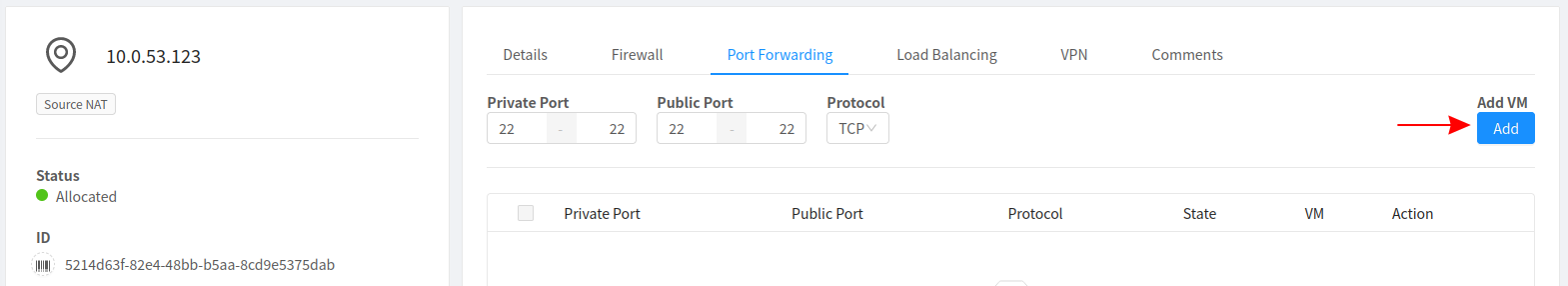

In order to access the nodes, the following changes need to be made in Apache CloudStack

-

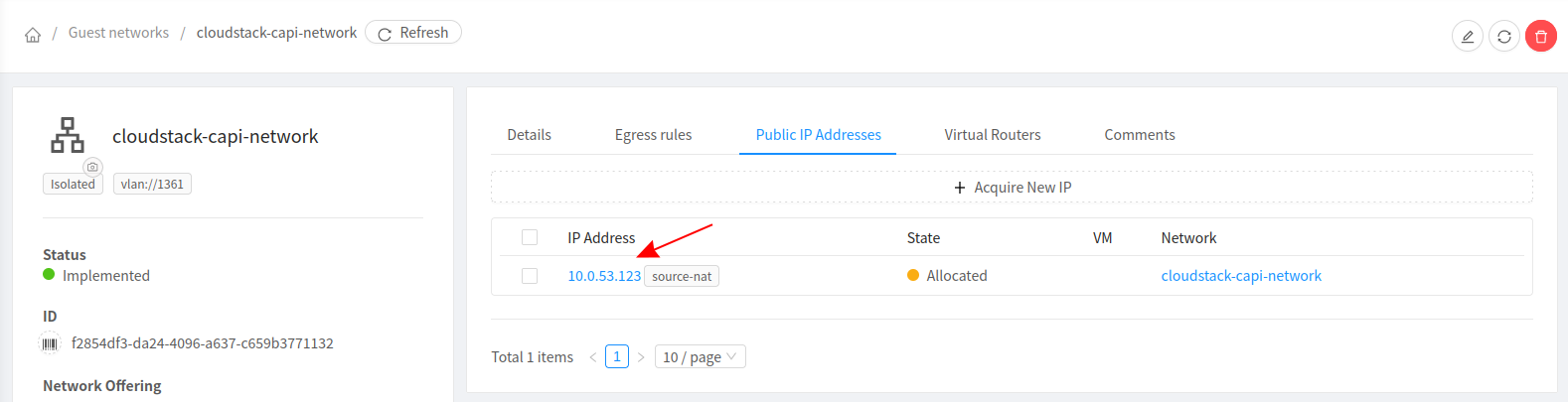

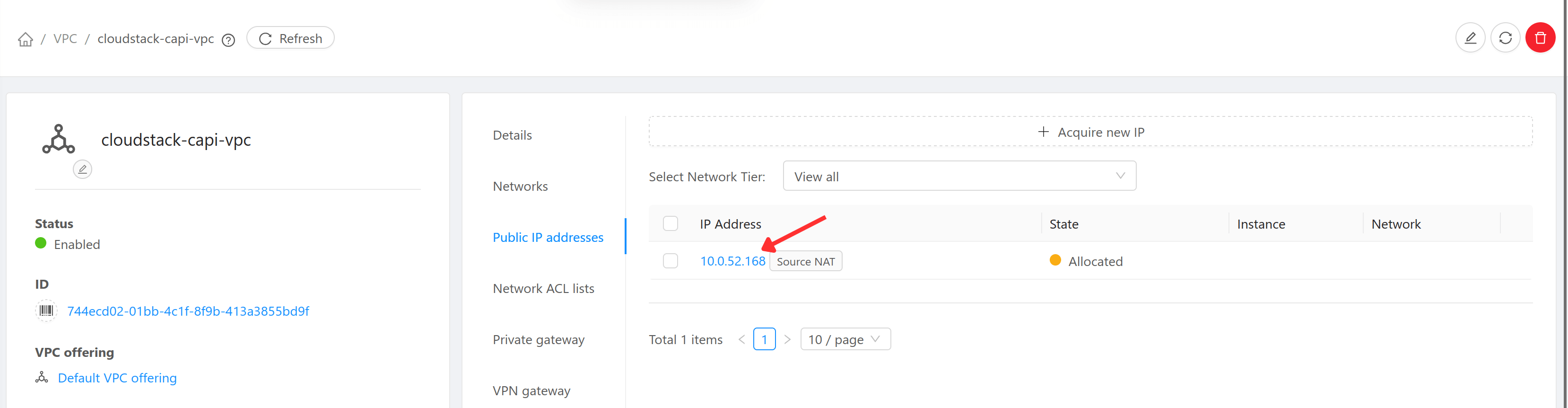

Select the Public IP belonging to the network via which users can access the VM. Either use the exiting IP or acquire a new IP. If the network is part of a VPC, the public IP will be the one assigned to the VPC.

-

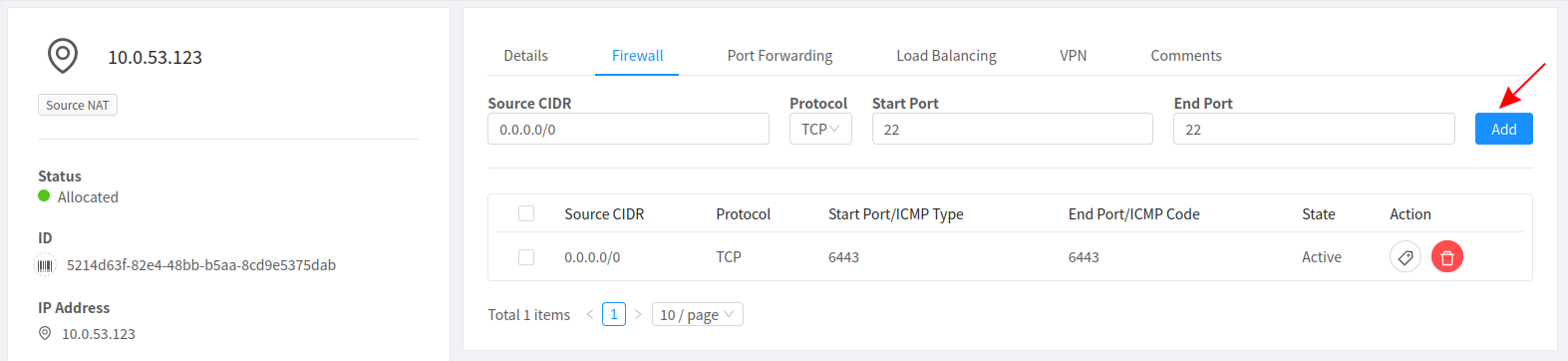

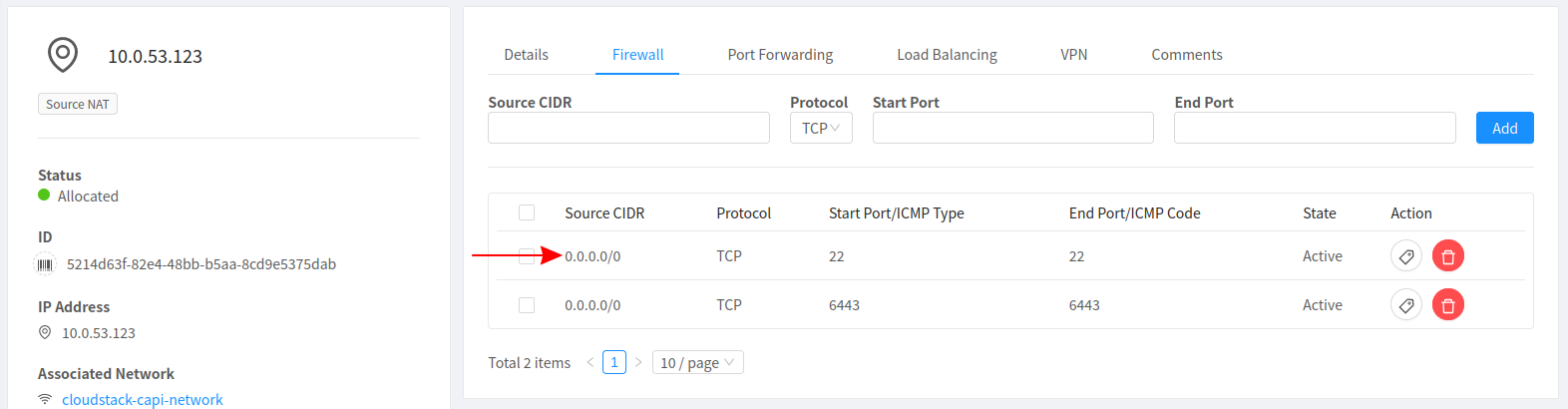

Add a firewall rule to allow access on the desired port

-

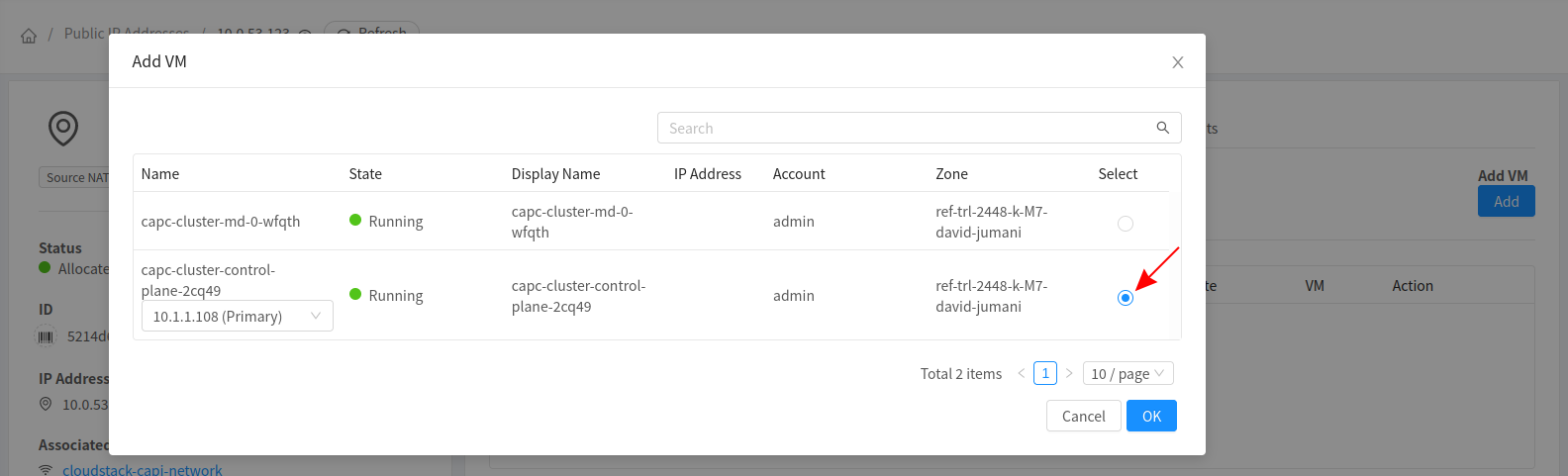

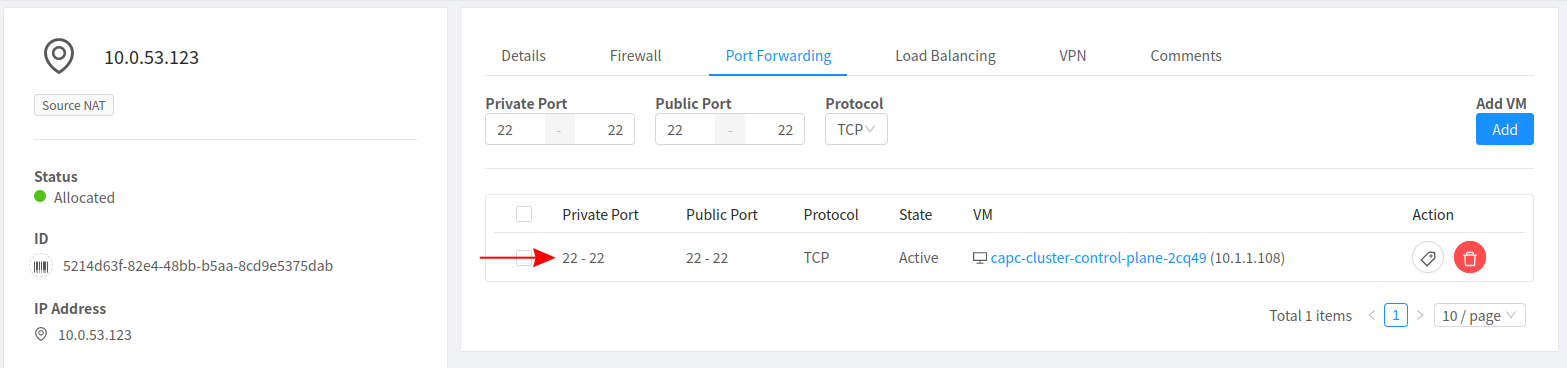

Add a port forwarding rule with the corresponding port to the firewall rule that was just created

Select the VM to which you would like SSH Access

SSH Into The Node

Now access the node via the Public IP using the corresponding SSH key pair. The username is ubuntu for Ubuntu images and cloud-user for Rocky Linux 8 images.

$ ssh ubuntu@10.0.53.123 -i path/to/key

Welcome to Ubuntu 20.04.3 LTS (GNU/Linux 5.4.0-97-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

The programs included with the Ubuntu system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by

applicable law.

To run a command as administrator (user "root"), use "sudo <command>".

See "man sudo_root" for details.

ubuntu@capc-cluster-control-plane-2cq49:~$
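If you switch between Ubuntu and Rocky Linux nodes often, the login user can be derived from the template name. This is only a convenience sketch: `ssh_user_for_template` is a hypothetical helper, and the name patterns assume your templates contain "ubuntu" or "rocky" in their names, as the example template names in this guide do.

```shell
#!/bin/sh
# Map a template name to the default login user described above.
# Hypothetical helper; the patterns are assumptions about template naming.
ssh_user_for_template() {
    case $1 in
        *ubuntu*) echo ubuntu ;;
        *rocky*)  echo cloud-user ;;
        *)        echo "unknown template: $1" >&2; return 1 ;;
    esac
}

# Usage:
# ssh "$(ssh_user_for_template kube-v1.23.3/ubuntu-2004)@10.0.53.123" -i path/to/key
```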

Unstacked etcd

There are two types of etcd topologies for configuring a Kubernetes cluster:

- Stacked: The etcd members and control plane components are co-located (run on the same node/machines)

- Unstacked/External: With the unstacked or external etcd topology, etcd members have dedicated machines and are not co-located with control plane components

The unstacked etcd topology is recommended for a HA cluster for the following reasons:

- External etcd topology decouples the control plane components and etcd member. So if a control plane-only node fails, or if there is a memory leak in a component like kube-apiserver, it won’t directly impact an etcd member.

- Etcd is resource intensive, so it is safer to have dedicated nodes for etcd, since it could use more disk space or higher bandwidth. Having a separate etcd cluster for these reasons could ensure a more resilient HA setup.

More details can be found here

Local storage

In this configuration, the storage used by etcd is a local directory on the node, rather than within the container itself. This provides resilience if the etcd pod is terminated: no data is lost, and it can be read from the local directory once the new etcd pod comes up.

Local storage for etcd can be configured by adding the etcd field to the KubeadmControlPlane.spec.kubeadmConfigSpec.clusterConfiguration spec.

The value should point to an empty directory on the node:

    kubeadmConfigSpec:
      clusterConfiguration:
        imageRepository: registry.k8s.io
        etcd:
          local:
            dataDir: /var/lib/etcddisk/etcd

If the user wishes to use a separate data disk as local storage, the disk can be formatted and mounted as shown:

kubeadmConfigSpec:
  diskSetup:
    filesystems:
      - device: /dev/vdb
        filesystem: ext4
        label: etcd_disk
  mounts:
    - - LABEL=etcd_disk
      - /var/lib/etcddisk
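Because dataDir is expected to point to an empty directory, a quick check on the node can catch a misconfigured path early. The following is a minimal sketch and not part of CAPC: the helper name is_empty_dir is hypothetical, and the demo runs against a temporary directory rather than /var/lib/etcddisk/etcd.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only if the path is an existing, empty directory.
is_empty_dir() {
    dir="$1"
    [ -d "$dir" ] || return 1
    # ls -A lists every entry except . and ..; empty output means an empty directory.
    [ -z "$(ls -A "$dir")" ]
}

# Demo against a temporary directory standing in for the etcd data directory.
demo_dir="$(mktemp -d)"
if is_empty_dir "$demo_dir"; then
    echo "empty: usable as dataDir"
fi

# Once anything is written into it, the check fails.
touch "$demo_dir/member"
if ! is_empty_dir "$demo_dir"; then
    echo "not empty: choose another directory"
fi
```

A freshly formatted ext4 filesystem usually contains a lost+found directory at its root, which is presumably why the example dataDir is a subdirectory of the mount point rather than the mount point itself.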

External etcd

In this configuration, etcd does not run on the Kubernetes Cluster. Instead, the Kubernetes Cluster uses an externally managed etcd cluster. This provides additional availability if the entire control plane node goes down, the caveat being that the externally managed etcd cluster must be always available.

External etcd can be configured by adding the etcd field to the KubeadmControlPlane.spec.kubeadmConfigSpec.clusterConfiguration spec.

The value should list the endpoints of the external etcd cluster and the paths to the certificate files used to connect to it.

kubeadmConfigSpec:
  clusterConfiguration:
    imageRepository: registry.k8s.io
    etcd:
      external:
        endpoints:
          - ${ETCD_ENDPOINT}
        caFile: /etc/kubernetes/pki/etcd/ca.crt
        certFile: /etc/kubernetes/pki/apiserver-etcd-client.crt
        keyFile: /etc/kubernetes/pki/apiserver-etcd-client.key

Additionally, the certificates have to be passed as Secrets as shown below:

# Ref: https://github.com/kubernetes-retired/cluster-api-bootstrap-provider-kubeadm/blob/master/docs/external-etcd.md
kind: Secret
apiVersion: v1
metadata:
  name: ${CLUSTER_NAME}-apiserver-etcd-client
data:
  # base64 encoded /etc/etcd/pki/apiserver-etcd-client.crt
  tls.crt: |
    ${BASE64_ENCODED__APISERVER_ETCD_CLIENT_CRT}
  # base64 encoded /etc/etcd/pki/apiserver-etcd-client.key
  tls.key: |
    ${BASE64_ENCODED__APISERVER_ETCD_CLIENT_KEY}
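The base64 placeholders in the Secret can be filled in from the certificate files with a small script. This is a sketch under stated assumptions: the helper names b64 and render_etcd_client_secret are hypothetical, and the demo uses placeholder files instead of real etcd client certificates.

```shell
#!/bin/sh
# Encode a file as single-line base64. Newlines are stripped with tr so the
# result is the same whether or not the local base64 wraps its output.
b64() {
    base64 < "$1" | tr -d '\n'
}

# Hypothetical helper: print the Secret manifest for a cluster name,
# a client certificate file and a client key file.
render_etcd_client_secret() {
    cluster_name="$1"
    crt_file="$2"
    key_file="$3"
    cat <<EOF
kind: Secret
apiVersion: v1
metadata:
  name: ${cluster_name}-apiserver-etcd-client
data:
  tls.crt: |
    $(b64 "$crt_file")
  tls.key: |
    $(b64 "$key_file")
EOF
}

# Demo with placeholder files; real input would be the etcd client cert and key.
demo="$(mktemp -d)"
printf 'placeholder certificate\n' > "$demo/client.crt"
printf 'placeholder key\n' > "$demo/client.key"
render_etcd_client_secret capc-cluster "$demo/client.crt" "$demo/client.key"
```

The rendered manifest could then be reviewed and applied to the management cluster, for example by piping it to kubectl apply -f -.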

CloudStack Permissions for CAPC

The account that CAPC runs under must, at a minimum, be a User type account with a role that grants the following permissions:

- assignToLoadBalancerRule

- associateIpAddress

- createAffinityGroup

- createEgressFirewallRule

- createLoadBalancerRule

- createNetwork

- createTags

- deleteAffinityGroup

- deleteNetwork

- deleteTags

- deployVirtualMachine

- destroyVirtualMachine

- disassociateIpAddress

- getUserKeys

- listAccounts

- listAffinityGroups

- listDiskOfferings

- listLoadBalancerRuleInstances

- listLoadBalancerRules

- listNetworkOfferings

- listNetworks

- listPublicIpAddresses

- listServiceOfferings

- listSSHKeyPairs

- listTags

- listTemplates

- listUsers

- listVirtualMachines

- listVirtualMachinesMetrics

- listVolumes

- listZones

- queryAsyncJobResult

- startVirtualMachine

- stopVirtualMachine

- updateVMAffinityGroup

Note: If the user doesn’t have permissions to expunge the VM, it will be left in a destroyed state. The user will need to manually expunge the VM.

This permission set has been verified to successfully run the CAPC E2E test suite (Oct 11, 2022).
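One way to audit a role against this list is to compare two plain-text files, one API name per line. The sketch below assumes you have already exported the role's allowed API names to a file by whatever means your environment provides; the helper name missing_permissions and both file names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print every API name listed in the "required" file
# that is absent from the "allowed" file (one API name per line in each).
missing_permissions() {
    required="$1"
    allowed="$2"
    req_sorted="$(mktemp)"
    all_sorted="$(mktemp)"
    sort -u "$required" > "$req_sorted"
    sort -u "$allowed" > "$all_sorted"
    # comm -23 keeps only the lines unique to the first (required) file.
    comm -23 "$req_sorted" "$all_sorted"
    rm -f "$req_sorted" "$all_sorted"
}

# Demo with a shortened list; a real check would use the full list above.
demo="$(mktemp -d)"
printf '%s\n' deployVirtualMachine listZones createTags > "$demo/required.txt"
printf '%s\n' listZones deployVirtualMachine > "$demo/allowed.txt"
missing_permissions "$demo/required.txt" "$demo/allowed.txt"
```

An empty result means every required API name was found in the allowed list.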

Developer Guide

Initial setup for development environment

Prerequisites

Please install the following tools:

- go

  - Get the latest patch version for go v1.17.

- kind

  - GO111MODULE="on" go get sigs.k8s.io/kind@v0.12.0

- kustomize

- envsubst

- make
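A short check can confirm that the tools listed above are on the PATH before continuing. This is a minimal sketch; the helper name missing_tools is hypothetical, and it only checks for presence, not versions.

```shell
#!/bin/sh
# Hypothetical helper: print the names of the given tools that are not on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

missing="$(missing_tools go kind kustomize envsubst make)"
if [ -n "$missing" ]; then
    echo "Missing tools:"
    echo "$missing"
else
    echo "All prerequisite tools found"
fi
```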

Get the source

Fork the cluster-api-provider-cloudstack repo:

cd "$(go env GOPATH)"/src

mkdir sigs.k8s.io

cd sigs.k8s.io/

git clone git@github.com:<GITHUB USERNAME>/cluster-api-provider-cloudstack.git

cd cluster-api-provider-cloudstack

git remote add upstream git@github.com:kubernetes-sigs/cluster-api-provider-cloudstack.git

git fetch upstream

Setup the CloudStack Environment

- Set up Apache CloudStack credentials

  - Create a file named cloud-config in the repo’s root directory, substituting in your own environment’s values

        [Global]
        api-url = <cloudstackApiUrl>
        api-key = <cloudstackApiKey>
        secret-key = <cloudstackSecretKey>

  - Run the following command to save the above Apache CloudStack connection info into an environment variable, to be used by clusterctl, where it gets passed to CAPC:

        export CLOUDSTACK_B64ENCODED_SECRET=$(base64 -w0 -i cloud-config)

- Register the capi-compatible templates in your Apache CloudStack installation.

  - Prebuilt images can be found here

  - To build a compatible image see CloudStack CAPI Images
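The credential steps can be tried end to end with placeholder values. The sketch below works in a temporary directory so it does not touch a real checkout; the URL and keys are made-up placeholders, and tr is used instead of -w0 as an assumption to keep the encoding step portable.

```shell
#!/bin/sh
# Work in a temporary directory so the sketch does not touch a real checkout.
workdir="$(mktemp -d)"
cd "$workdir" || exit 1

# Placeholder values; substitute your own environment's values.
cat > cloud-config <<'EOF'
[Global]
api-url = http://cloudstack.example.invalid:8080/client/api
api-key = EXAMPLE_API_KEY
secret-key = EXAMPLE_SECRET_KEY
EOF

# Encode the file on a single line. Stripping newlines with tr replaces -w0
# so the sketch also works where base64 has no -w option.
CLOUDSTACK_B64ENCODED_SECRET="$(base64 < cloud-config | tr -d '\n')"
export CLOUDSTACK_B64ENCODED_SECRET

# Round-trip check: the decoded value should match the file exactly.
printf '%s' "$CLOUDSTACK_B64ENCODED_SECRET" | base64 -d | diff - cloud-config \
    && echo "encoded secret matches cloud-config"
```

The round-trip check is worth keeping: a wrapped or truncated value is a plausible way for the credentials to end up unreadable.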

Running local management cluster for development

Before the next steps, make sure initial setup for development environment steps are complete.

There are two ways to build Apache CloudStack manager from local cluster-api-provider-cloudstack source and run it in local kind cluster:

Option 1: Setting up Development Environment with Tilt

Tilt is a tool for quickly building, pushing, and reloading Docker containers as part of a Kubernetes deployment. Many of the Cluster API engineers use it for quick iteration. Please see our Tilt instructions to get started.

Option 2: The Old-fashioned way

Running cluster-api and cluster-api-provider-cloudstack controllers in a kind cluster:

- Create a local kind cluster

kind create cluster

- Install core cluster-api controllers (the version must match the cluster-api version in go.mod)

clusterctl init

- Release manifests under the ./out directory

RELEASE_TAG="e2e" make release-manifests

- Apply the manifests

kubectl apply -f ./out/infrastructure.yaml

Developing Cluster API Provider CloudStack with Tilt

This document describes how to use kind and Tilt for a simplified workflow that offers easy deployments and rapid iterative builds.

Before the next steps, make sure initial setup for development environment steps are complete.

Also, visit the Cluster API documentation on Tilt for more information on how to set up your development environment.

Create a kind cluster

First, make sure you have a kind cluster and that your KUBECONFIG is set up correctly:

kind create cluster

This local cluster will be running all the cluster api controllers and become the management cluster which then can be used to spin up workload clusters on Apache CloudStack.

Get the source

Get the source for core cluster-api for development with Tilt along with cluster-api-provider-cloudstack.

cd "$(go env GOPATH)"/src

mkdir -p sigs.k8s.io

cd sigs.k8s.io/

git clone git@github.com:kubernetes-sigs/cluster-api.git

cd cluster-api

git fetch origin

Create a tilt-settings.json file

Next, create a tilt-settings.json file and place it in your local copy of cluster-api. Here is an example:

Example tilt-settings.json for CAPC clusters:

{
  "default_registry": "gcr.io/your-project-name-here",
  "provider_repos": ["../cluster-api-provider-cloudstack"],
  "enable_providers": ["kubeadm-bootstrap", "kubeadm-control-plane", "cloudstack"]
}

Example tilt-settings.json for CAPC clusters with experimental feature gate:

{
  "default_registry": "gcr.io/your-project-name-here",
  "provider_repos": ["../cluster-api-provider-cloudstack"],
  "enable_providers": ["kubeadm-bootstrap", "kubeadm-control-plane", "cloudstack"],
  "kustomize_substitutions": {
    "EXP_KUBEADM_BOOTSTRAP_FORMAT_IGNITION": "true"
  }
}

Debugging

If you would like to debug CAPC (or core CAPI / another provider) you can run the provider with delve. This will then allow you to attach to delve and debug.

To do this you need to use the debug configuration in tilt-settings.json. Full details of the options can be seen here.

An example tilt-settings.json:

{
  "default_registry": "gcr.io/your-project-name-here",
  "provider_repos": ["../cluster-api-provider-cloudstack"],
  "enable_providers": ["kubeadm-bootstrap", "kubeadm-control-plane", "cloudstack"],
  "kustomize_substitutions": {
    "CLOUDSTACK_B64ENCODED_CREDENTIALS": "RANDOM_STRING=="
  },
  "debug": {
    "cloudstack": {
      "continue": true,
      "port": 30000
    }
  }
}

Once you have run tilt (see section below) you will be able to connect to the running instance of delve.

For vscode, you can use a launch configuration like this:

{
  "name": "Connect to CAPC",
  "type": "go",
  "request": "attach",
  "mode": "remote",
  "remotePath": "",
  "port": 30000,
  "host": "127.0.0.1",
  "showLog": true,
  "trace": "log",
  "logOutput": "rpc"
}

For GoLand/IntelliJ add a new run configuration following these instructions.

Or you could use delve directly from the CLI using a command similar to this:

dlv-dap connect 127.0.0.1:30000

Run Tilt!

To launch your development environment, run:

tilt up

The kind cluster becomes a management cluster after this point. Check the pods running on the kind cluster with kubectl get pods -A.

Create Workload Cluster

Creating a CAPC Cluster:

- Set up the environment variables. The generated cluster spec will be populated by the values set here. See the example values below (and replace with your own!)

  The entire list of configuration variables as well as how to fetch them can be found here

      # The Apache CloudStack zone in which the cluster is to be deployed
      export CLOUDSTACK_ZONE_NAME=zone1

      # If the referenced network doesn't exist, a new isolated network
      # will be created.
      export CLOUDSTACK_NETWORK_NAME=GuestNet1

      # The IP you put here must be available as an unused public IP on the network
      # referenced above. If it's not available, the control plane will fail to create.
      # You can see the list of available IP's when you try allocating a public
      # IP in the network at
      # Network -> Guest Networks -> <Network Name> -> IP Addresses
      export CLUSTER_ENDPOINT_IP=192.168.1.161

      # This is the standard port that the Control Plane process runs on
      export CLUSTER_ENDPOINT_PORT=6443

      # Machine offerings must be pre-created. Control plane offering
      # must have >2GB RAM available
      export CLOUDSTACK_CONTROL_PLANE_MACHINE_OFFERING="Large Instance"
      export CLOUDSTACK_WORKER_MACHINE_OFFERING="Small Instance"

      # Referring to a prerequisite capi-compatible image you've loaded into Apache CloudStack
      export CLOUDSTACK_TEMPLATE_NAME=kube-v1.23.3/ubuntu-2004

      # The SSH KeyPair to log into the VM (Optional: you must use clusterctl --flavor *managed-ssh*)
      export CLOUDSTACK_SSH_KEY_NAME=CAPCKeyPair6

      # Sync resources created by CAPC in Apache Cloudstack CKS. Default is false.
      # Requires setting CAPC_CLOUDSTACKMACHINE_CKS_SYNC=true before initialising the cloudstack provider.
      # Or set enable-cloudstack-cks-sync to true in the deployment for capc-controller.
      export CLOUDSTACK_SYNC_WITH_ACS=true

- Generate the CAPC cluster spec yaml file

      clusterctl generate cluster capc-cluster \
          --kubernetes-version v1.23.3 \
          --control-plane-machine-count=1 \
          --worker-machine-count=1 \
          > capc-cluster-spec.yaml

- Apply the CAPC cluster spec to your kind management cluster

      kubectl apply -f capc-cluster-spec.yaml

- Check the progress of capc-cluster, and wait for all the components (with the exception of MachineDeployment/capc-cluster-md-0) to be ready. (MachineDeployment/capc-cluster-md-0 will not show ready until the CNI is installed.)

      clusterctl describe cluster capc-cluster

- Get the generated kubeconfig for your newly created Apache CloudStack cluster capc-cluster

      clusterctl get kubeconfig capc-cluster > capc-cluster.kubeconfig

- Install calico or weave net cni plugin on the workload cluster so that pods can see each other

      KUBECONFIG=capc-cluster.kubeconfig kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/master/manifests/calico.yaml

  or

      KUBECONFIG=capc-cluster.kubeconfig kubectl apply -f https://raw.githubusercontent.com/weaveworks/weave/master/prog/weave-kube/weave-daemonset-k8s-1.11.yaml

- Verify the K8s cluster is fully up. (It may take a minute for the nodes status to all reach ready state.)

  - Run KUBECONFIG=capc-cluster.kubeconfig kubectl get nodes, and observe the following output

        NAME                               STATUS   ROLES                  AGE     VERSION
        capc-cluster-control-plane-xsnxt   Ready    control-plane,master   2m56s   v1.20.10
        capc-cluster-md-0-9fr9d            Ready    <none>                 112s    v1.20.10
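Before running clusterctl generate cluster, it can help to confirm that the variables it substitutes are actually set. The sketch below is not part of clusterctl or CAPC: the helper name unset_vars is hypothetical, and the list treats the non-optional variables from the example as required, which is an assumption.

```shell
#!/bin/sh
# Hypothetical helper: print the names of variables that are unset or empty.
unset_vars() {
    for name in "$@"; do
        # Indirect lookup of the variable called $name (POSIX sh has no ${!name}).
        eval "value=\${$name:-}"
        [ -n "$value" ] || echo "$name"
    done
}

# Names taken from the example values; assumed here to be required.
required="CLOUDSTACK_ZONE_NAME CLOUDSTACK_NETWORK_NAME CLUSTER_ENDPOINT_IP
CLUSTER_ENDPOINT_PORT CLOUDSTACK_CONTROL_PLANE_MACHINE_OFFERING
CLOUDSTACK_WORKER_MACHINE_OFFERING CLOUDSTACK_TEMPLATE_NAME"

# $required is intentionally unquoted so it splits into separate names.
missing="$(unset_vars $required)"
if [ -n "$missing" ]; then
    echo "Set these variables before running clusterctl generate cluster:"
    echo "$missing"
fi
```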

Validating the CAPC Cluster:

Run a simple kubernetes app called ‘test-thing’

- Create the container

KUBECONFIG=capc-cluster.kubeconfig kubectl run test-thing --image=rockylinux/rockylinux:8 --restart=Never -- /bin/bash -c 'echo Hello, World!'

KUBECONFIG=capc-cluster.kubeconfig kubectl get pods

- Wait for the container to complete, and check the logs for ‘Hello, World!’

KUBECONFIG=capc-cluster.kubeconfig kubectl logs test-thing

kubectl/clusterctl Reference:

- Pods in capc-cluster -- cluster running in Apache CloudStack with calico cni

% KUBECONFIG=capc-cluster.kubeconfig kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default test-thing 0/1 Completed 0 2m43s

kube-system calico-kube-controllers-784dcb7597-dw42t 1/1 Running 0 4m31s

kube-system calico-node-mmp2x 1/1 Running 0 4m31s

kube-system calico-node-vz99f 1/1 Running 0 4m31s

kube-system coredns-74ff55c5b-n6zp7 1/1 Running 0 9m18s

kube-system coredns-74ff55c5b-r8gvj 1/1 Running 0 9m18s

kube-system etcd-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

kube-system kube-apiserver-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

kube-system kube-controller-manager-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

kube-system kube-proxy-6g9zb 1/1 Running 0 9m3s

kube-system kube-proxy-7gjbv 1/1 Running 0 9m18s

kube-system kube-scheduler-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

- Pods in capc-cluster -- cluster running in Apache CloudStack with weave net cni

% KUBECONFIG=capc-cluster.kubeconfig kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default test-thing 0/1 Completed 0 38s

kube-system coredns-5d78c9869d-9xq2s 1/1 Running 0 21h

kube-system coredns-5d78c9869d-gphs2 1/1 Running 0 21h

kube-system etcd-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system kube-apiserver-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system kube-controller-manager-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system kube-proxy-8lfnm 1/1 Running 0 21h

kube-system kube-proxy-brj78 1/1 Running 0 21h

kube-system kube-scheduler-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system weave-net-rqckr 2/2 Running 1 (3h8m ago) 3h8m

kube-system weave-net-rzms4 2/2 Running 1 (3h8m ago) 3h8m

- Pods in original kind cluster (also called bootstrap cluster, management cluster)

% kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

capc-system capc-controller-manager-55798f8594-lp2xs 1/1 Running 0 30m

capi-kubeadm-bootstrap-system capi-kubeadm-bootstrap-controller-manager-7857cd7bb8-rldnw 1/1 Running 0 30m

capi-kubeadm-control-plane-system capi-kubeadm-control-plane-controller-manager-6cc4b4d964-tz5zq 1/1 Running 0 30m

capi-system capi-controller-manager-7cfcfdf99b-79lr9 1/1 Running 0 30m

cert-manager cert-manager-848f547974-dl7hc 1/1 Running 0 31m

cert-manager cert-manager-cainjector-54f4cc6b5-gfgsw 1/1 Running 0 31m

cert-manager cert-manager-webhook-7c9588c76-5m2b2 1/1 Running 0 31m

kube-system coredns-558bd4d5db-22zql 1/1 Running 0 48m

kube-system coredns-558bd4d5db-7g7kh 1/1 Running 0 48m

kube-system etcd-capi-test-control-plane 1/1 Running 0 48m

kube-system kindnet-7p2dq 1/1 Running 0 48m

kube-system kube-apiserver-capi-test-control-plane 1/1 Running 0 48m

kube-system kube-controller-manager-capi-test-control-plane 1/1 Running 0 48m

kube-system kube-proxy-cwrhv 1/1 Running 0 48m

kube-system kube-scheduler-capi-test-control-plane 1/1 Running 0 48m

local-path-storage local-path-provisioner-547f784dff-f2g7r 1/1 Running 0 48m

Building Cluster API Provider for CloudStack

Prerequisites:

- Follow the instructions here to install the following tools:

  - docker

  - kind

  - kubectl

  - clusterctl (Requires v1.1.5 +)

- Create a local docker registry to save your docker image - otherwise, you need an image registry to push it somewhere else.

- Download this script to your local machine and run it. The script creates a kind cluster and configures it to use the local docker registry:

      wget https://raw.githubusercontent.com/kubernetes-sigs/cluster-api/main/hack/kind-install-for-capd.sh
      chmod +x ./kind-install-for-capd.sh
      ./kind-install-for-capd.sh

- Set up Apache CloudStack credentials

  - Create a file named cloud-config in the repo’s root directory, substituting in your own environment’s values

        [Global]
        api-url = <cloudstackApiUrl>
        api-key = <cloudstackApiKey>
        secret-key = <cloudstackSecretKey>

  - Run the following command to save the above Apache CloudStack connection info into an environment variable, to be used by ./config/default/credentials.yaml and ultimately the generated infrastructure-components.yaml, where it gets passed to CAPC:

        export CLOUDSTACK_B64ENCODED_SECRET=$(base64 -w0 -i cloud-config)

- Set the IMG environment variable so that the Makefile knows where to push the docker image (if building your own)

      export IMG=localhost:5000/cluster-api-provider-capc
      make docker-build
      make docker-push

- Set the source image location in config/default/manager_image_patch.yaml so that the CAPC deployment manifest files have the right image path in them

- Generate the CAPC manifests (if building your own) into $RELEASE_DIR

  make build will generate and copy the infrastructure-components.yaml and metadata.yaml files to $RELEASE_DIR, which is ./out by default. To deploy the generated manifests for use by clusterctl, you may want to override the default value with export RELEASE_DIR=${HOME}/.cluster-api/overrides/infrastructure-cloudstack/<VERSION>/ before running make build.

- Generate the clusterctl config file so that clusterctl knows how to provision the Apache CloudStack cluster, referencing whatever you set for $RELEASE_DIR from above for the url:

      cat << EOF > ~/.cluster-api/cloudstack.yaml
      providers:
      - name: "cloudstack"
        type: "InfrastructureProvider"
        url: ${HOME}/.cluster-api/overrides/infrastructure-cloudstack/<VERSION>/infrastructure-components.yaml
      EOF

- Ensure that the required Apache CloudStack resources have been created: a zone, pod, cluster, a k8s-compatible template, and compute offerings (2GB+ of RAM and 2 vCPU for the control plane offering).

Deploying Custom Builds

Initialize the management cluster

Run the following command to turn your cluster into a management cluster and load the Apache CloudStack components into it.

clusterctl init --infrastructure cloudstack --config ~/.cluster-api/cloudstack.yaml

Creating a CAPC Cluster:

- Set up the environment variables. The generated cluster spec will be populated by the values set here. See the example values below (and replace with your own!)

  The entire list of configuration variables as well as how to fetch them can be found here

      # The Apache CloudStack zone in which the cluster is to be deployed
      export CLOUDSTACK_ZONE_NAME=zone1

      # If the referenced network doesn't exist, a new isolated network
      # will be created.
      export CLOUDSTACK_NETWORK_NAME=GuestNet1

      # The IP you put here must be available as an unused public IP on the network
      # referenced above. If it's not available, the control plane will fail to create.
      # You can see the list of available IP's when you try allocating a public
      # IP in the network at
      # Network -> Guest Networks -> <Network Name> -> IP Addresses
      export CLUSTER_ENDPOINT_IP=192.168.1.161

      # This is the standard port that the Control Plane process runs on
      export CLUSTER_ENDPOINT_PORT=6443

      # Machine offerings must be pre-created. Control plane offering
      # must have >2GB RAM available
      export CLOUDSTACK_CONTROL_PLANE_MACHINE_OFFERING="Large Instance"
      export CLOUDSTACK_WORKER_MACHINE_OFFERING="Small Instance"

      # Referring to a prerequisite capi-compatible image you've loaded into Apache CloudStack
      export CLOUDSTACK_TEMPLATE_NAME=kube-v1.23.3/ubuntu-2004

      # The SSH KeyPair to log into the VM (Optional: you must use clusterctl --flavor *managed-ssh*)
      export CLOUDSTACK_SSH_KEY_NAME=CAPCKeyPair6

      # Sync resources created by CAPC in Apache Cloudstack CKS. Default is false.
      # Requires setting CAPC_CLOUDSTACKMACHINE_CKS_SYNC=true before initialising the cloudstack provider.
      # Or set enable-cloudstack-cks-sync to true in the deployment for capc-controller.
      export CLOUDSTACK_SYNC_WITH_ACS=true

- Generate the CAPC cluster spec yaml file

      clusterctl generate cluster capc-cluster \
          --kubernetes-version v1.23.3 \
          --control-plane-machine-count=1 \
          --worker-machine-count=1 \
          > capc-cluster-spec.yaml

- Apply the CAPC cluster spec to your kind management cluster

      kubectl apply -f capc-cluster-spec.yaml

- Check the progress of capc-cluster, and wait for all the components (with the exception of MachineDeployment/capc-cluster-md-0) to be ready. (MachineDeployment/capc-cluster-md-0 will not show ready until the CNI is installed.)

      clusterctl describe cluster capc-cluster

- Get the generated kubeconfig for your newly created Apache CloudStack cluster capc-cluster

      clusterctl get kubeconfig capc-cluster > capc-cluster.kubeconfig

- Install calico or weave net cni plugin on the workload cluster so that pods can see each other

      KUBECONFIG=capc-cluster.kubeconfig kubectl apply -f https://raw.githubusercontent.com/projectcalico/calico/master/manifests/calico.yaml

  or

      KUBECONFIG=capc-cluster.kubeconfig kubectl apply -f https://raw.githubusercontent.com/weaveworks/weave/master/prog/weave-kube/weave-daemonset-k8s-1.11.yaml

- Verify the K8s cluster is fully up. (It may take a minute for the nodes status to all reach ready state.)

  - Run KUBECONFIG=capc-cluster.kubeconfig kubectl get nodes, and observe the following output

        NAME                               STATUS   ROLES                  AGE     VERSION
        capc-cluster-control-plane-xsnxt   Ready    control-plane,master   2m56s   v1.20.10
        capc-cluster-md-0-9fr9d            Ready    <none>                 112s    v1.20.10
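Since nodes can take a while to become ready, the verification step can be scripted by filtering the kubectl get nodes output. This is a minimal sketch: the helper name not_ready_nodes is hypothetical, and the sample text is a modified copy of the expected output with one node deliberately marked NotReady.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready". Combined states
# such as "Ready,SchedulingDisabled" are therefore reported as well.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Demo using sample output; in practice, pipe in the real command:
#   KUBECONFIG=capc-cluster.kubeconfig kubectl get nodes | not_ready_nodes
sample='NAME                               STATUS     ROLES                  AGE     VERSION
capc-cluster-control-plane-xsnxt   Ready      control-plane,master   2m56s   v1.20.10
capc-cluster-md-0-9fr9d            NotReady   <none>                 112s    v1.20.10'

printf '%s\n' "$sample" | not_ready_nodes
```

An empty result indicates that every listed node reports Ready.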

Validating the CAPC Cluster:

Run a simple kubernetes app called ‘test-thing’

- Create the container

KUBECONFIG=capc-cluster.kubeconfig kubectl run test-thing --image=rockylinux/rockylinux:8 --restart=Never -- /bin/bash -c 'echo Hello, World!'

KUBECONFIG=capc-cluster.kubeconfig kubectl get pods

- Wait for the container to complete, and check the logs for ‘Hello, World!’

KUBECONFIG=capc-cluster.kubeconfig kubectl logs test-thing

kubectl/clusterctl Reference:

- Pods in capc-cluster -- cluster running in Apache CloudStack with calico cni

% KUBECONFIG=capc-cluster.kubeconfig kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default test-thing 0/1 Completed 0 2m43s

kube-system calico-kube-controllers-784dcb7597-dw42t 1/1 Running 0 4m31s

kube-system calico-node-mmp2x 1/1 Running 0 4m31s

kube-system calico-node-vz99f 1/1 Running 0 4m31s

kube-system coredns-74ff55c5b-n6zp7 1/1 Running 0 9m18s

kube-system coredns-74ff55c5b-r8gvj 1/1 Running 0 9m18s

kube-system etcd-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

kube-system kube-apiserver-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

kube-system kube-controller-manager-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

kube-system kube-proxy-6g9zb 1/1 Running 0 9m3s

kube-system kube-proxy-7gjbv 1/1 Running 0 9m18s

kube-system kube-scheduler-capc-cluster-control-plane-tknwx 1/1 Running 0 9m21s

- Pods in capc-cluster -- cluster running in Apache CloudStack with weave net cni

% KUBECONFIG=capc-cluster.kubeconfig kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default test-thing 0/1 Completed 0 38s

kube-system coredns-5d78c9869d-9xq2s 1/1 Running 0 21h

kube-system coredns-5d78c9869d-gphs2 1/1 Running 0 21h

kube-system etcd-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system kube-apiserver-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system kube-controller-manager-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system kube-proxy-8lfnm 1/1 Running 0 21h

kube-system kube-proxy-brj78 1/1 Running 0 21h

kube-system kube-scheduler-capc-cluster-control-plane-49khm 1/1 Running 0 21h

kube-system weave-net-rqckr 2/2 Running 1 (3h8m ago) 3h8m

kube-system weave-net-rzms4 2/2 Running 1 (3h8m ago) 3h8m

- Pods in original kind cluster (also called bootstrap cluster, management cluster)

% kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

capc-system capc-controller-manager-55798f8594-lp2xs 1/1 Running 0 30m

capi-kubeadm-bootstrap-system capi-kubeadm-bootstrap-controller-manager-7857cd7bb8-rldnw 1/1 Running 0 30m

capi-kubeadm-control-plane-system capi-kubeadm-control-plane-controller-manager-6cc4b4d964-tz5zq 1/1 Running 0 30m

capi-system capi-controller-manager-7cfcfdf99b-79lr9 1/1 Running 0 30m

cert-manager cert-manager-848f547974-dl7hc 1/1 Running 0 31m

cert-manager cert-manager-cainjector-54f4cc6b5-gfgsw 1/1 Running 0 31m

cert-manager cert-manager-webhook-7c9588c76-5m2b2 1/1 Running 0 31m

kube-system coredns-558bd4d5db-22zql 1/1 Running 0 48m

kube-system coredns-558bd4d5db-7g7kh 1/1 Running 0 48m

kube-system etcd-capi-test-control-plane 1/1 Running 0 48m

kube-system kindnet-7p2dq 1/1 Running 0 48m

kube-system kube-apiserver-capi-test-control-plane 1/1 Running 0 48m

kube-system kube-controller-manager-capi-test-control-plane 1/1 Running 0 48m

kube-system kube-proxy-cwrhv 1/1 Running 0 48m

kube-system kube-scheduler-capi-test-control-plane 1/1 Running 0 48m

local-path-storage local-path-provisioner-547f784dff-f2g7r 1/1 Running 0 48m

This document is to help developers understand how to test CAPC.

Code Origin

Most of the code under test/e2e comes from the CAPD (Cluster API for Docker) e2e tests (https://github.com/kubernetes-sigs/cluster-api/tree/main/test/e2e). The ACS-specific parts are under test/e2e/config and test/e2e/data/infrastructure-cloudstack.

e2e

This section describes how to run end-to-end (e2e) testing with CAPC.

Requirements

- Admin access to an Apache CloudStack (ACS) server

- The testing must occur on a host that can access the ACS server

- Docker (download)

- Kind (download)

Environment variables

The first step to running the e2e tests is setting up the required environment variables:

| Environment variable | Description | Default Value |
|---|---|---|
| CLOUDSTACK_ZONE_NAME | The zone name | zone1 |
| CLOUDSTACK_NETWORK_NAME | The network name. If it does not exist, an isolated network with this name is created. | Shared1 |
| CLUSTER_ENDPOINT_IP | The cluster endpoint IP | 172.16.2.199 |
| CLUSTER_ENDPOINT_IP_2 | The cluster endpoint IP for a second cluster | 172.16.2.199 |
| CLOUDSTACK_CONTROL_PLANE_MACHINE_OFFERING | The machine offering for the control plane VM instances | Large Instance |
| CLOUDSTACK_WORKER_MACHINE_OFFERING | The machine offering for the worker node VM instances | Medium Instance |
| CLOUDSTACK_TEMPLATE_NAME | The machine template for both control plane and worker node VM instances | kube-v1.20.10/ubuntu-2004 |
| CLOUDSTACK_SSH_KEY_NAME | The name of the SSH key added to the VM instances | CAPCKeyPair6 |

Default values for these variables are defined in config/cloudstack.yaml. This cloudstack.yaml can be completely overridden by providing make with the fully qualified path of another cloudstack.yaml via the environment variable E2E_CONFIG.
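Because E2E_CONFIG expects a fully qualified path, a relative path can be resolved before it is exported. The sketch below uses a stand-in file in a temporary directory; the helper name abs_path and the file name my-cloudstack.yaml are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: turn a path to an existing file into an absolute path.
abs_path() {
    dir="$(cd "$(dirname "$1")" && pwd)" || return 1
    echo "$dir/$(basename "$1")"
}

# Demo: a stand-in config file in a temporary directory.
demo="$(mktemp -d)"
touch "$demo/my-cloudstack.yaml"
cd "$demo" || exit 1

E2E_CONFIG="$(abs_path ./my-cloudstack.yaml)"
export E2E_CONFIG
echo "E2E_CONFIG=$E2E_CONFIG"

# With the variable exported, the tests would then be started from the
# repository root with:
#   make run-e2e
```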

You will also have to define a k8s secret in a cloud-config.yaml file in the project root, containing a pointer to and credentials for the CloudStack backend that will be used for the test:

apiVersion: v1
kind: Secret
metadata:
  name: secret1
  namespace: default
type: Opaque
stringData:
  api-key: XXXX
  secret-key: XXXX
  api-url: http://1.2.3.4:8080/client/api
  verify-ssl: "false"

This will be applied to the kind cluster that hosts CAPI/CAPC for the test, allowing CAPC to access the cluster.

The api-key and secret-key can be found or generated at Home > Accounts > admin > Users > admin of the ACS management UI. verify-ssl is an optional flag and its default value is true. CAPC skips verifying the host SSL certificates when the flag is set to false.

Running the e2e tests

Run the following command to execute the CAPC e2e tests:

make run-e2e

This command runs all e2e test cases.

You can set the JOB environment variable to a regular expression that selects which test cases to execute. For example,

JOB=PR-Blocking make run-e2e

This command runs the e2e tests that contain PR-Blocking in their spec names.
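To get a feel for which specs a JOB value would select, the expression can be tried against a list of spec names. The sketch below uses grep -E as a stand-in matcher, which is an assumption: the test runner's own regular expression dialect may differ in detail. The helper name select_specs is hypothetical, and the spec names are copied from a CAPC test report.

```shell
#!/bin/sh
# A few spec names, copied from a sample test report.
specs='When testing app deployment to the workload cluster [TC1][PR-Blocking] Should be able to download an HTML from the app deployed to the workload cluster
When testing affinity group Should have host affinity group when affinity is pro
When testing affinity group Should have host affinity group when affinity is anti
When testing node drain timeout A node should be forcefully removed if it cannot be drained in time'

# Hypothetical helper: print the spec names matched by a regular expression.
# grep -E only approximates the matcher used by the test runner.
select_specs() {
    printf '%s\n' "$specs" | grep -E -- "$1"
}

select_specs 'PR-Blocking'
select_specs 'affinity is (pro|anti)'
```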

Debugging the e2e tests

The E2E tests can be debugged by attaching a debugger to the e2e process after it is launched (i.e., make run-e2e). To facilitate this, the E2E tests can be run with environment variable PAUSE_FOR_DEBUGGER_ATTACH=true. (This is only strictly needed when you want the debugger to break early in the test process, i.e., in SynchronizedBeforeSuite. There’s usually quite enough time to attach if you’re not breaking until your actual test code runs.)

When this environment variable is set to true a 15s pause is inserted at the beginning of the test process (i.e., in the SynchronizedBeforeSuite). The workflow is:

- Launch the e2e test: PAUSE_FOR_DEBUGGER_ATTACH=true JOB=MyTest make run-e2e

- Wait for console message: Pausing 15s so you have a chance to attach a debugger to this process...

- Quickly attach your debugger to the e2e process (i.e., e2e.test)

CI/CD for e2e testing

The community has set up a CI/CD pipeline using Jenkins for e2e testing.

How it works

The CI/CD pipeline works as follows:

- A user triggers e2e testing with a GitHub PR comment; only repository OWNERS and a list of engineers are allowed to do so;

- A program monitors the PR comments of the GitHub repository, parses the comments, and kicks off Jenkins jobs;

- Jenkins creates an Apache CloudStack environment with a specific version and hypervisor type, if needed;

- Jenkins runs CAPC e2e testing with specific Kubernetes versions and images;

- Jenkins posts the results of e2e testing as a GitHub PR comment, with a link to the test logs.

How to use it

Similar to other prow commands (see here), the e2e testing can be triggered by the PR comment /run-e2e:

Usage: /run-e2e [-k Kubernetes_Version] [-c CloudStack_Version] [-h Hypervisor] [-i Template/Image]

[-f Kubernetes_Version_Upgrade_From] [-t Kubernetes_Version_Upgrade_To]

- Supported Kubernetes versions are: [‘1.27.2’, ‘1.26.5’, ‘1.25.10’, ‘1.24.14’, ‘1.23.3’, ‘1.22.6’]. The default value is ‘1.27.2’.

- Supported CloudStack versions are: [‘4.18’, ‘4.17’, ‘4.16’]. If it is not set, an existing environment will be used.

- Supported hypervisors are: [‘kvm’, ‘vmware’, ‘xen’]. The default value is ‘kvm’.

- Supported templates are: [‘ubuntu-2004-kube’, ‘rockylinux-8-kube’]. The default value is ‘ubuntu-2004-kube’.

- By default it tests Kubernetes upgrade from version ‘1.26.5’ to ‘1.27.2’.
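The flags and their defaults can be illustrated with a small parser. This is a hypothetical sketch, not the CI bot's implementation: the function name parse_run_e2e and the output format are made up, and it only mirrors the documented flags and default values without validating them against the supported lists.

```shell
#!/bin/sh
# Hypothetical parser for the arguments of a "/run-e2e ..." comment.
parse_run_e2e() {
    # Documented defaults; an empty CloudStack version means "use an
    # existing environment".
    k8s="1.27.2"; acs=""; hypervisor="kvm"; template="ubuntu-2004-kube"
    from="1.26.5"; to="1.27.2"
    OPTIND=1
    while getopts "k:c:h:i:f:t:" opt; do
        case "$opt" in
            k) k8s="$OPTARG" ;;
            c) acs="$OPTARG" ;;
            h) hypervisor="$OPTARG" ;;
            i) template="$OPTARG" ;;
            f) from="$OPTARG" ;;
            t) to="$OPTARG" ;;
            *) return 1 ;;
        esac
    done
    echo "kubernetes=$k8s cloudstack=${acs:-existing} hypervisor=$hypervisor template=$template upgrade=$from->$to"
}

# No flags: every documented default applies.
parse_run_e2e
# Some flags overridden, the rest left at their defaults.
parse_run_e2e -k 1.26.5 -h vmware -i rockylinux-8-kube
```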

Examples

- Examples of /run-e2e commands

/run-e2e

/run-e2e -k 1.27.2 -h kvm -i ubuntu-2004-kube

/run-e2e -k 1.27.2 -c 4.18 -h kvm -i ubuntu-2004-kube -f 1.26.5 -t 1.27.2

- Example of test results

Test Results : (tid-126)

Environment: kvm Rocky8(x3), Advanced Networking with Management Server Rocky8

Kubernetes Version: v1.27.2

Kubernetes Version upgrade from: v1.26.5

Kubernetes Version upgrade to: v1.27.2

CloudStack Version: 4.18

Template: ubuntu-2004-kube

E2E Test Run Logs: https://github.com/blueorangutan/capc-prs/releases/download/capc-pr-ci-cd/capc-e2e-artifacts-pr277-sl-126.zip

[PASS] When testing Kubernetes version upgrades Should successfully upgrade kubernetes versions when there is a change in relevant fields

[PASS] When testing subdomain Should create a cluster in a subdomain

[PASS] When testing K8S conformance [Conformance] Should create a workload cluster and run kubetest

[PASS] When testing app deployment to the workload cluster with slow network [ToxiProxy] Should be able to download an HTML from the app deployed to the workload cluster

[PASS] When testing multiple CPs in a shared network with kubevip Should successfully create a cluster with multiple CPs in a shared network

[PASS] When testing resource cleanup Should create a new network when the specified network does not exist

[PASS] When testing app deployment to the workload cluster with network interruption [ToxiProxy] Should be able to create a cluster despite a network interruption during that process

[PASS] When testing node drain timeout A node should be forcefully removed if it cannot be drained in time

[PASS] When testing machine remediation Should replace a machine when it is destroyed

[PASS] When testing with custom disk offering Should successfully create a cluster with a custom disk offering

[PASS] When testing horizontal scale out/in [TC17][TC18][TC20][TC21] Should successfully scale machine replicas up and down horizontally

[PASS] When testing MachineDeployment rolling upgrades Should successfully upgrade Machines upon changes in relevant MachineDeployment fields

[PASS] with two clusters should successfully add and remove a second cluster without breaking the first cluster

[PASS] When testing with disk offering Should successfully create a cluster with disk offering

[PASS] When testing affinity group Should have host affinity group when affinity is pro

[PASS] When testing affinity group Should have host affinity group when affinity is anti

[PASS] When the specified resource does not exist Should fail due to the specified account is not found [TC4a]

[PASS] When the specified resource does not exist Should fail due to the specified domain is not found [TC4b]

[PASS] When the specified resource does not exist Should fail due to the specified control plane offering is not found [TC7]

[PASS] When the specified resource does not exist Should fail due to the specified template is not found [TC6]

[PASS] When the specified resource does not exist Should fail due to the specified zone is not found [TC3]

[PASS] When the specified resource does not exist Should fail due to the specified disk offering is not found

[PASS] When the specified resource does not exist Should fail due to the compute resources are not sufficient for the specified offering [TC8]

[PASS] When the specified resource does not exist Should fail due to the specified disk offer is not customized but the disk size is specified

[PASS] When the specified resource does not exist Should fail due to the specified disk offer is customized but the disk size is not specified

[PASS] When the specified resource does not exist Should fail due to the public IP can not be found

[PASS] When the specified resource does not exist When starting with a healthy cluster Should fail to upgrade worker machine due to insufficient compute resources

[PASS] When the specified resource does not exist When starting with a healthy cluster Should fail to upgrade control plane machine due to insufficient compute resources

[PASS] When testing app deployment to the workload cluster [TC1][PR-Blocking] Should be able to download an HTML from the app deployed to the workload cluster

Ran 28 of 29 Specs in 10458.173 seconds

SUCCESS! -- 28 Passed | 0 Failed | 0 Pending | 1 Skipped

PASS

Releasing Cluster API Provider for CloudStack

Prerequisites:

- Please install the following tools:

- Set up and log in to gcloud by running

gcloud init

Note

To publish any artifact, you must be a member of the k8s-infra-staging-capi-cloudstack group.

Creating only the docker container

If you only want to build and upload the docker container, rather than create a release, run the following command:

REGISTRY=<your custom registry> IMAGE_NAME=<your custom image name> TAG=<your custom tag> make docker-build

The image defaults to gcr.io/k8s-staging-capi-cloudstack/capi-cloudstack-controller:dev
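As a rough illustration of how the three variables might combine into an image reference, the sketch below assumes they are joined as REGISTRY/IMAGE_NAME:TAG, which is consistent with the documented default. The image_ref function is hypothetical and not part of the repository; the Makefile remains the authority on the actual rule.

```shell
# Hypothetical helper: prints the image reference that docker-build is
# assumed to produce. The fallback values mirror the documented default;
# the REGISTRY/IMAGE_NAME:TAG composition is an assumption.
image_ref() {
  local registry="${REGISTRY:-gcr.io/k8s-staging-capi-cloudstack}"
  local image_name="${IMAGE_NAME:-capi-cloudstack-controller}"
  local tag="${TAG:-dev}"
  printf '%s/%s:%s\n' "$registry" "$image_name" "$tag"
}
```

Calling image_ref with none of the variables set prints the default image, and setting any one of them overrides only that part of the reference.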

Creating a new release

Run the following command to create the new release artifacts as well as publish them to the upstream gcr.io repository:

RELEASE_TAG=<your custom tag> make release-staging

Create the necessary release in GitHub along with the following artifacts (found in the out directory after running the previous command):

- metadata.yaml

- infrastructure-components.yaml

- cluster-template*.yaml
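Before uploading, it can help to confirm that the artifacts listed above were actually generated. The following is a minimal sketch of such a check; check_release_artifacts is a hypothetical helper, not something shipped with the project, and it assumes the files sit directly in the directory passed as its first argument (out by default).

```shell
# Hypothetical helper: returns non-zero if any expected release artifact
# is missing from the given directory (defaults to "out").
check_release_artifacts() {
  local dir="${1:-out}"
  local missing=0
  local f
  for f in metadata.yaml infrastructure-components.yaml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  # Expand the glob; if nothing matches, $1 stays the literal pattern
  # and the -f test below fails.
  set -- "$dir"/cluster-template*.yaml
  if [ ! -f "$1" ]; then
    echo "missing: $dir/cluster-template*.yaml" >&2
    missing=1
  fi
  return "$missing"
}
```

For example, `check_release_artifacts out && echo "all release artifacts present"` could be run right after the release command finishes.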

Note

- The RELEASE_TAG should be in the format of v<major>.<minor>.<patch>. For example, v0.6.0

- For RC releases, the RELEASE_TAG should be in the format of v<major>.<minor>.<patch>-rc<rc-number>. For example, v0.6.0-rc1

- Before creating the release, ensure that the metadata.yaml file is updated with the latest release information.
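The tag formats described in this note can be checked before invoking the release target. The sketch below is one possible way to do that; is_valid_release_tag is a hypothetical helper, and the regular expression is an interpretation of the two documented formats (numeric components, optional -rc suffix followed by a number).

```shell
# Hypothetical helper: succeeds only if the argument looks like
# v<major>.<minor>.<patch> or v<major>.<minor>.<patch>-rc<rc-number>.
is_valid_release_tag() {
  printf '%s\n' "$1" | grep -Eq '^v[0-9]+\.[0-9]+\.[0-9]+(-rc[0-9]+)?$'
}
```

A guard such as `is_valid_release_tag "$RELEASE_TAG" || echo "unexpected RELEASE_TAG format" >&2` could then precede the release command.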